Audio Censorship and Content Moderation: The Invisible Hand Shaping Digital Soundscapes

The digital landscape has transformed how we create, share, and consume audio content, but this transformation brings new challenges around content moderation and censorship. Audio that was once confined to controlled environments like movie theaters or television broadcasts now circulates freely across global platforms, where audiences with varying cultural sensitivities encounter it without contextual preparation or age-appropriate filtering.

Platform-specific content policies have emerged as powerful forces shaping creative decisions, influencing everything from independent content creators uploading gaming videos to major entertainment productions designed for multi-platform distribution. These policies create a complex web of restrictions and guidelines that content creators must navigate, often forcing creative compromises that prioritize platform compliance over artistic vision.

The challenge extends beyond simple compliance issues. Audio censorship policies are inconsistently applied, frequently updated, and often implemented through automated systems that lack the nuance to distinguish between legitimate creative content and potentially harmful material. This creates an environment of uncertainty where creators must make conservative choices to avoid algorithmic penalties that could devastate their reach and revenue.

The Evolution of Platform Audio Policies

Major social media platforms began implementing audio content restrictions as their user bases expanded and regulatory pressure increased. What started as basic profanity filters has evolved into sophisticated systems attempting to identify and moderate complex audio content including violence-related sounds, copyrighted material, and culturally sensitive audio elements.

YouTube’s approach to audio moderation has become particularly influential given its dominance in video content distribution. The platform’s policies regarding weapon-related audio content have forced gaming content creators to modify or remove certain sound effects from gameplay footage, leading to an entire cottage industry of “clean” audio editing services that sanitize content for platform compliance.

TikTok’s short-form content model presents different challenges, as the platform’s algorithm-driven discovery system can amplify or suppress content based on audio characteristics that users may not consciously notice. The platform’s policies around violence-related audio have influenced music production and sound design choices, with creators increasingly avoiding sonic elements that might trigger content suppression.

Impact on Gaming and Interactive Media

The gaming industry faces particularly complex challenges because violent content is central to many popular game genres. Developers whose games are likely to be streamed or featured in user-generated content must consider how their audio design choices will translate to platform-compliant gameplay footage.

Some game developers now include “streamer-safe” audio options that automatically replace potentially problematic sound effects with platform-friendly alternatives. This approach acknowledges the reality that user-generated content and streaming have become essential marketing channels that require specific audio considerations during development.
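As a rough illustration of how such an option might work, the sketch below swaps flagged sound effects for milder substitutes when a setting is enabled. The effect names, the AudioSettings class, and the resolve_sound function are all hypothetical and do not describe any particular game or engine.

```python
from dataclasses import dataclass

# Hypothetical mapping from original sound-effect IDs to milder substitutes.
# The names are illustrative only and not taken from any real game.
STREAMER_SAFE_SUBSTITUTES = {
    "rifle_shot_realistic": "rifle_shot_stylized",
    "explosion_close": "explosion_muffled",
}


@dataclass
class AudioSettings:
    # Assumed user-facing toggle, off by default.
    streamer_safe: bool = False


def resolve_sound(effect_id: str, settings: AudioSettings) -> str:
    """Return the ID of the sound effect that should actually be played."""
    if settings.streamer_safe:
        # Effects without a listed substitute play unchanged.
        return STREAMER_SAFE_SUBSTITUTES.get(effect_id, effect_id)
    return effect_id
```

With the toggle off, every effect plays as authored. With it on, only the effects listed in the mapping are replaced, so the rest of the mix is left untouched.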

Esports and competitive gaming present additional complications, as the authentic audio experience that provides competitive advantages to players may conflict with broadcasting standards required for mainstream platform distribution. Tournament organizers frequently implement separate audio mixes for live audiences versus broadcast streams to navigate these competing requirements.

Creative Adaptation and Workaround Strategies

Content creators have developed numerous strategies to maintain creative integrity while complying with platform restrictions. Audio replacement techniques allow creators to substitute problematic sound effects with acceptable alternatives during post-production, though this approach requires additional time and resources that smaller creators may not have.

Symbolic sound design has emerged as a creative response to censorship concerns, where creators use abstract or stylized audio elements to convey violent actions without triggering automated moderation systems. This approach has led to innovative sonic vocabularies that communicate intended meanings through implication rather than literal representation.

The use of music and ambient sounds to mask potentially problematic audio elements has become a common technique, though this approach can compromise the clarity and impact of original content. Creators must balance artistic vision with the practical necessity of maintaining platform visibility and monetization opportunities.

Regional and Cultural Variations in Moderation

Content moderation policies vary significantly across geographic regions and cultural contexts, creating additional complexity for creators attempting to reach global audiences. Audio content that is acceptable in one region may face restrictions in others, forcing creators to either limit their geographic reach or create multiple versions of the same content.

The handling of gun sounds represents a particularly complex example of these regional variations, as different cultures and legal systems have dramatically different relationships with firearm-related content. What might be considered routine entertainment audio in one context could be deemed inappropriate or potentially harmful in another.

Economic Implications and Market Forces

The economic impact of audio censorship extends beyond individual creator revenues to influence entire industries and creative ecosystems. Music producers increasingly consider platform policies during composition and production phases, avoiding sonic elements that might limit distribution opportunities or reduce algorithmic promotion.

Sound effects libraries and audio production companies have adapted their offerings to include “platform-safe” alternatives to potentially problematic content, creating new market segments focused on compliance rather than pure creative expression. This shift represents a fundamental change in how audio content is conceived and produced for digital distribution.

Technological Solutions and Future Developments

Artificial intelligence and machine learning technologies promise more sophisticated content moderation capabilities that could better distinguish between legitimate creative content and genuinely harmful material. However, these same technologies raise concerns about increased automation in creative decision-making and the potential for more pervasive censorship.

Context-aware moderation systems that consider factors like content genre, target audience, and creator history could provide more nuanced approaches to audio content evaluation. These systems might enable more appropriate treatment of creative content while maintaining necessary protections against genuinely harmful material.
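One way to picture this idea is a review threshold that shifts with context instead of staying fixed. The sketch below is purely illustrative: the AudioContext fields, the genre allowances, and every numeric weight are assumptions chosen for the example, not values used by any real platform.

```python
from dataclasses import dataclass


@dataclass
class AudioContext:
    genre: str                  # e.g. "gaming", "film", "news"
    audience_is_adult: bool     # assumed audience signal
    creator_prior_strikes: int  # assumed creator-history signal


# Hypothetical allowances: genres where violent audio is expected
# get a higher threshold before content is sent for review.
GENRE_ALLOWANCE = {"gaming": 0.2, "film": 0.2, "news": 0.1}


def review_threshold(context: AudioContext) -> float:
    """Return the classifier score at which content is flagged for review."""
    threshold = 0.5  # assumed baseline
    threshold += GENRE_ALLOWANCE.get(context.genre, 0.0)
    if context.audience_is_adult:
        threshold += 0.1
    # Prior strikes lower the threshold, capped at three strikes.
    threshold -= 0.1 * min(context.creator_prior_strikes, 3)
    return max(0.1, min(threshold, 0.95))


def needs_review(classifier_score: float, context: AudioContext) -> bool:
    """classifier_score is an assumed 0-to-1 estimate of harmful audio."""
    return classifier_score >= review_threshold(context)
```

Under these assumed weights, the same classifier score can pass for a gaming channel with an adult audience and a clean history, yet be flagged for a channel with no genre context or with repeated prior strikes.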

The ongoing development of decentralized content platforms and blockchain-based distribution systems offers potential alternatives to traditional platform censorship, though these approaches introduce their own technical and practical challenges.

The tension between creative freedom and content moderation will likely intensify as audio content becomes increasingly central to digital communication and entertainment. Balancing these competing interests and values will require ongoing dialogue between creators, platforms, and regulatory bodies.

-

Celebrity1 year ago

Celebrity1 year agoWho Is Jennifer Rauchet?: All You Need To Know About Pete Hegseth’s Wife

-

Celebrity1 year ago

Celebrity1 year agoWho Is Mindy Jennings?: All You Need To Know About Ken Jennings Wife

-

Celebrity1 year ago

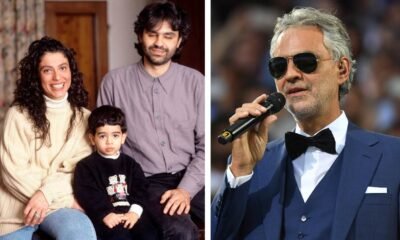

Celebrity1 year agoWho Is Enrica Cenzatti?: The Untold Story of Andrea Bocelli’s Ex-Wife

-

Celebrity1 year ago

Celebrity1 year agoWho Is Klarissa Munz: The Untold Story of Freddie Highmore’s Wife