The Hidden Truth About Diffrhythm: What Two Weeks of Real-World Testing Actually Reveals

The promise sounds almost too good to be true: type some words, describe a vibe, and walk away with a fully produced song complete with vocals and instrumentation. When AI music generators first emerged, the reaction from creators oscillated between fascination and skepticism. Could a machine really understand the emotional weight behind a minor chord progression? Could it capture the vulnerability in a whispered verse or the explosive energy of a chorus drop?

After two weeks of living with Diffrhythm AI, pushing it in directions it probably wasn’t designed for and feeding it everything from heartbreak ballads to experimental electronic concepts, the tool turned out to be neither a magic button that replaces musicians (spoiler: it doesn’t) nor a disappointing gimmick. What emerged was something more nuanced and, frankly, more interesting than either extreme.

The First Impression: Simplicity That Feels Suspicious

The initial encounter with Diffrhythm feels almost anticlimactic. No tutorial videos to watch, no parameter menus to navigate, no presets to audition. Just a text box for lyrics, another for style description, and a generate button. Coming from years of wrestling with digital audio workstations, this minimalism feels suspicious—like ordering a gourmet meal and receiving only salt and pepper as seasoning options.

But here’s the revelation: constraints breed creativity differently than abundance does. When you can’t tweak every reverb tail and compressor threshold, the focus shifts to the concept rather than the *execution*. You become a director rather than an engineer, and that shift fundamentally changes the creative process.

What Happens Behind That “Generate” Button

Understanding how Diffrhythm actually works helps explain what it does well. Unlike older AI models that build songs sequentially (verse, then chorus, then bridge), this system uses latent diffusion: it first forms a compressed “idea” of the entire song, then expands that idea into actual audio.

Think of it like this: a traditional approach is like writing a story one sentence at a time without knowing the ending. Diffrhythm’s method is more like outlining the entire narrative arc, then filling in the prose. The result? Musical elements that reference each other across the composition. Motifs that return. Transitions that feel intentional rather than arbitrary.

In a side-by-side test, the same lyrics were generated twice: once with Diffrhythm and once with a popular sequential generation tool. The difference was subtle but significant. The Diffrhythm version had instrumental callbacks in the final chorus that echoed the introduction. The competing tool’s version felt like three separate songs awkwardly merged.

The Learning Curve Nobody Mentions

Here’s what the marketing materials don’t emphasize: getting good results requires developing a new skill—prompt crafting for music. It’s not difficult, but it’s not automatic either.

The Evolution of Effective Prompting

| Phase | Approach | Typical Results | Key Insight |
| --- | --- | --- | --- |
| Days 1-3 | Vague descriptions (“upbeat pop song”) | Generic, forgettable outputs | Specificity matters enormously |
| Days 4-7 | Over-detailed prompts (“120 BPM pop with synth leads, vocal harmonies, bridge breakdown…”) | Confused, inconsistent generations | Too much direction overwhelms the model |
| Days 8-14 | Balanced prompts focusing on mood + 2-3 musical elements (“melancholic indie rock with acoustic guitar and building drums”) | Consistently interesting, usable results | Sweet spot exists between vague and overwhelming |

By the second week, an intuition develops for what works. Emotional descriptors perform better than technical specifications. “Anxious energy with staccato strings” yields more interesting results than “140 BPM with violin ostinato pattern.”
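The "mood plus 2-3 musical elements" pattern from the table is easy to turn into a small helper. The sketch below is only an illustration of that habit: `build_style_prompt` is a hypothetical function, not part of any Diffrhythm API, and the 2-3 element limit simply encodes the sweet spot observed during testing.

```python
def build_style_prompt(mood, genre, elements):
    """Compose a style description from a mood, a genre, and 2-3 musical elements.

    Hypothetical helper: it only builds a string to paste into the style box.
    """
    cleaned = [e.strip() for e in elements if e.strip()]
    if not 2 <= len(cleaned) <= 3:
        # Fewer than 2 tends toward vague prompts, more than 3 toward overload.
        raise ValueError("use 2-3 musical elements")
    if len(cleaned) == 2:
        joined = " and ".join(cleaned)
    else:
        joined = ", ".join(cleaned[:-1]) + " and " + cleaned[-1]
    return f"{mood.strip()} {genre.strip()} with {joined}"


print(build_style_prompt("melancholic", "indie rock",
                         ["acoustic guitar", "building drums"]))
```

Called this way, the helper reproduces the balanced prompt from the Days 8-14 row. Rejecting element lists that are too short or too long is a cheap guard against sliding back into the Days 1-7 habits.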

Real-World Testing: Five Scenarios, Five Outcomes

Scenario 1: YouTube Background Music

Task: Create non-distracting instrumental for a 3-minute tutorial video.

Prompt: “Soft ambient electronic, minimal percussion, optimistic but not distracting”

Result: Surprisingly perfect on the second attempt. The first generation had a melodic hook that was too catchy (viewers would focus on the music instead of content). The second was exactly the “sonic wallpaper” needed—present but unobtrusive.

Scenario 2: Songwriting Demo

Task: Hear written lyrics with actual production to assess if the concept works.

Prompt: Full lyrics about urban isolation, requested “downtempo R&B with sparse production”

Result: The vocal delivery revealed that the second verse was too wordy—syllables crashed into each other awkwardly. This wasn’t a failure of the tool; it was valuable feedback about the lyrics themselves. Adjusting the verse structure and regenerating produced something genuinely moving.

Scenario 3: Podcast Intro Music

Task: 30-second energetic opener that establishes brand identity.

Prompt: “High-energy indie rock intro, building drums, anthemic feel, 30 seconds”

Result: Here’s where limitations appeared. Diffrhythm appears to generate clips of a minimum length, so the output doesn’t always match a precise duration request. The result was 45 seconds long and had to be trimmed manually to 30. Still usable, but not the seamless workflow hoped for.
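The manual trim can be scripted. The following is a minimal sketch that assumes the generated track has been exported as a 16-bit PCM WAV file (the export format is an assumption, not something confirmed during testing). It uses only Python's standard `wave` and `array` modules, and `trim_wav` is a hypothetical helper name.

```python
import array
import sys
import wave


def trim_wav(src_path, dst_path, seconds=30.0, fade_seconds=2.0):
    """Keep the first `seconds` of a 16-bit PCM WAV and fade out the tail.

    Returns the duration of the written file in seconds.
    """
    with wave.open(src_path, "rb") as src:
        params = src.getparams()
        if params.sampwidth != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        wanted = min(params.nframes, int(seconds * params.framerate))
        raw = src.readframes(wanted)

    samples = array.array("h")
    samples.frombytes(raw)
    if sys.byteorder == "big":
        samples.byteswap()  # WAV sample data is little-endian

    channels = params.nchannels
    keep = len(samples) // channels  # frames actually read
    fade_frames = min(keep, int(fade_seconds * params.framerate))
    start = keep - fade_frames
    for i in range(fade_frames):
        gain = 1.0 - (i + 1) / fade_frames  # linear ramp ending at silence
        for ch in range(channels):
            idx = (start + i) * channels + ch
            samples[idx] = int(samples[idx] * gain)

    if sys.byteorder == "big":
        samples.byteswap()
    with wave.open(dst_path, "wb") as dst:
        dst.setparams(params)  # frame count in the header is corrected on close
        dst.writeframes(samples.tobytes())
    return keep / params.framerate


# Example (file names are placeholders):
# trim_wav("podcast_intro_45s.wav", "podcast_intro_30s.wav", seconds=30.0)
```

The short linear fade keeps the cut from sounding abrupt. An audio editor gives a nicer result, but for a podcast opener this is often enough.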

Scenario 4: Genre Experimentation

Task: Explore what “folk-electronic fusion” might sound like without investing studio time.

Prompt: “Acoustic folk melodies with subtle electronic textures, organic meets synthetic”

Result: Fascinating and unexpected. The model interpreted this as fingerpicked guitar with ambient synth pads and glitchy percussion accents. It opened creative directions that wouldn’t have been considered otherwise. This is where Diffrhythm AI shines—as an ideation partner.

Scenario 5: Client Presentation Mockups

Task: Create three different musical directions for a brand pitch, quickly.

Prompt: Same lyrics, three style variations: “corporate uplifting,” “indie authentic,” “electronic modern”

Result: Generated all three in under five minutes total. Were they final-production ready? No. Did they effectively communicate distinct sonic identities to the client? Absolutely. This speed-to-concept ratio is genuinely game-changing for creative professionals.

Diffrhythm vs. Traditional Workflow: The Honest Comparison

| Aspect | Diffrhythm Approach | Traditional Production | The Reality |
| --- | --- | --- | --- |
| Time Investment | 30-60 seconds per generation | Hours to days per song | Diffrhythm wins for demos/concepts; traditional wins for polish |
| Skill Barrier | Prompt writing (learnable in days) | Music theory, production technique (years to master) | Dramatically more accessible |
| Creative Control | High-level direction only | Granular control over every element | Trade-off depends on your needs |
| Output Quality | 70-80% “good enough” rate | Consistent professional quality (with expertise) | Diffrhythm requires iteration tolerance |
| Cost | $7-59/month subscription | Studio time, equipment, software ($1000s) | Massive cost advantage for certain use cases |

The comparison isn’t really fair because the two serve different purposes. Diffrhythm isn’t trying to replace Abbey Road Studios. It’s trying to give non-musicians access to musical expression, and give musicians a rapid prototyping tool.

The Uncomfortable Truths

Let’s address what enthusiastic reviews often gloss over:

Inconsistency is real. Some generations are remarkably good. Others are bafflingly off-target despite identical prompts. This randomness can be frustrating when working against deadlines.

Vocal pronunciation quirks exist. Complex words or rapid lyrical delivery sometimes produce unnatural syllable emphasis. You’ll occasionally get “em-PHA-sis on the wrong syl-LA-ble” moments.

Genre boundaries get blurry. Request “jazz” and you might get smooth jazz, bebop, or jazz-adjacent pop depending on how the model interprets context. Specificity helps but doesn’t guarantee precision.

You’re not getting radio-ready masters. The audio quality is good, sometimes very good, but professional ears will notice compression artifacts and mixing choices that wouldn’t pass in commercial releases.
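The inconsistency point can be put in rough numbers. The sketch below takes the 70-80% “good enough” rate from the comparison table at face value and assumes each generation is an independent attempt, which is a simplification. It then estimates how many generations to budget for when a deadline is looming. Both function names are hypothetical.

```python
import math


def chance_of_usable_take(hit_rate, attempts):
    """Probability that at least one of `attempts` generations is usable."""
    return 1.0 - (1.0 - hit_rate) ** attempts


def attempts_needed(hit_rate, confidence):
    """Smallest number of generations with at least `confidence` chance of one usable take."""
    if not (0.0 < hit_rate < 1.0 and 0.0 < confidence < 1.0):
        raise ValueError("hit_rate and confidence must be strictly between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - hit_rate))


for rate in (0.7, 0.8):
    n = attempts_needed(rate, 0.99)
    print(f"hit rate {rate:.0%}: plan for {n} generations "
          f"({chance_of_usable_take(rate, n):.1%} chance of a usable take)")
```

Under these assumptions, three or four generations are enough to be about 99% sure of getting one usable take. At 30-60 seconds per generation, that is a few minutes of waiting rather than a workflow blocker.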

Who Benefits Most (And Who Doesn’t)

Ideal Users:

- Content creators needing original music without licensing nightmares

- Songwriters wanting to hear lyrics in context before studio investment

- Marketing teams exploring sonic branding directions quickly

- Educators demonstrating musical concepts

- Hobbyists experimenting with musical ideas

Probably Not Ideal For:

- Professional musicians seeking release-ready tracks

- Projects requiring precise musical notation or specific arrangements

- Situations demanding absolute consistency across multiple generations

- Anyone expecting zero learning curve or first-try perfection

The Bigger Question: What Does This Technology Mean?

Diffrhythm AI Music represents something larger than just another AI tool—it’s a glimpse into democratized creativity. For decades, musical expression required either innate talent plus years of training, or significant financial resources to hire those who had both. This technology doesn’t eliminate the value of expertise (great musicians will always create things AI cannot), but it does lower the barrier for people who simply want to hear their ideas come to life.

The results won’t always be perfect. You’ll generate songs that miss the mark. You’ll iterate more than you’d like. But somewhere in that process, you’ll also generate something that surprises you—a melody you didn’t expect, a vocal delivery that captures exactly the emotion you were chasing, an instrumental texture that opens new creative doors.

That’s the real value proposition: not perfection, but possibility. Not replacement, but augmentation. Not the end of human creativity, but a new tool in its service.

After two weeks of intensive testing, the verdict isn’t “Diffrhythm AI music is revolutionary” or “it’s overhyped nonsense.” It’s something more measured: this technology is genuinely useful for specific applications, imperfect in predictable ways, and improving at a pace that suggests today’s limitations may be tomorrow’s footnotes.

The question isn’t whether AI will transform music creation—it already is. The question is whether tools like Diffrhythm will empower more people to participate in that creation, or simply add noise to an already crowded landscape. Based on these two weeks of real-world use, the answer leans cautiously toward the former.

-

Celebrity1 year ago

Celebrity1 year agoWho Is Jennifer Rauchet?: All You Need To Know About Pete Hegseth’s Wife

-

Celebrity1 year ago

Celebrity1 year agoWho Is Mindy Jennings?: All You Need To Know About Ken Jennings Wife

-

Celebrity1 year ago

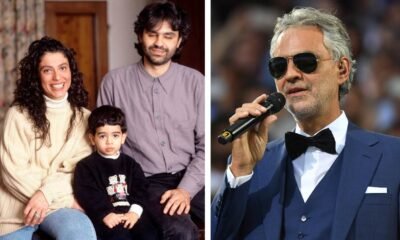

Celebrity1 year agoWho Is Enrica Cenzatti?: The Untold Story of Andrea Bocelli’s Ex-Wife

-

Celebrity1 year ago

Celebrity1 year agoWho Is Klarissa Munz: The Untold Story of Freddie Highmore’s Wife