Can AI Turn 2D Images into Interactive 3D Assets?

Artificial intelligence is rapidly reshaping industries, from content creation to medical imaging. One area gaining tremendous traction is the use of AI to convert flat, two-dimensional visuals into immersive, interactive three-dimensional models. But can AI really turn 2D images into fully usable 3D assets? The short answer: yes, and it's already happening.

Before diving deeper, let’s explore how this transformation from image to 3D is made possible and where it’s being applied.

The Rise of AI in Visual Computing

This transformation has been made possible by AI's rapid progress in understanding visual information. Neural networks can now analyze a 2D image, estimate its depth, geometry, and texture, and use those estimates to reconstruct a 3D view. These image-to-3D conversion methods are not merely experimental: they are already in use in sectors like gaming, virtual reality, and architecture.

From Pixels to Polygons: The Magic of AI Mapping

Turning a 2D image into a 3D model may sound like science fiction, but the technology is real.

AI image-to-3D software usually relies on convolutional neural networks (CNNs) or transformer models. These systems are trained on large datasets of paired 2D images and 3D models. Once trained, the model can interpret shapes, estimate depth, and produce a point cloud or mesh representation from a single picture. Additional stages can then apply materials such as textures and refine lighting or surface quality.
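The article does not say how any particular product builds its geometry, so what follows is only a minimal sketch of one classical step in that process. It assumes a model has already predicted a per-pixel depth map and that the photo was taken with a simple pinhole camera whose intrinsics are known. Each pixel is back-projected into 3D space, and neighbouring pixels are joined into triangles to form a mesh. The names `PinholeCamera`, `depth_to_mesh`, and `to_obj`, along with the toy depth values, are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]


@dataclass
class PinholeCamera:
    """Hypothetical pinhole intrinsics (in pixels): focal lengths and principal point."""
    fx: float
    fy: float
    cx: float
    cy: float


def depth_to_mesh(depth: List[List[float]], cam: PinholeCamera) -> Tuple[List[Vertex], List[Face]]:
    """Back-project a rectangular per-pixel depth map into a triangle mesh.

    Assumption: pixels with depth <= 0 mean "no estimate" and are skipped.
    """
    index: Dict[Tuple[int, int], int] = {}  # (row, col) -> vertex index
    vertices: List[Vertex] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue
            # Pinhole back-projection: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy
            index[(v, u)] = len(vertices)
            vertices.append(((u - cam.cx) * z / cam.fx, (v - cam.cy) * z / cam.fy, z))

    faces: List[Face] = []
    for v in range(len(depth) - 1):
        for u in range(len(depth[v]) - 1):
            # Corners of the 2x2 pixel block: top-left, top-right, bottom-left, bottom-right
            corners: List[Optional[int]] = [
                index.get((v, u)), index.get((v, u + 1)),
                index.get((v + 1, u)), index.get((v + 1, u + 1)),
            ]
            if None in corners:
                continue  # skip blocks that touch a missing depth value
            tl, tr, bl, br = corners
            # Split the block into two triangles with the same winding order
            faces.append((tl, bl, tr))
            faces.append((tr, bl, br))
    return vertices, faces


def to_obj(vertices: List[Vertex], faces: List[Face]) -> str:
    """Serialize to Wavefront OBJ text (OBJ face indices are 1-based)."""
    lines = [f"v {x:.4f} {y:.4f} {z:.4f}" for x, y, z in vertices]
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in faces]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Toy 2x2 depth map standing in for a model's prediction (made-up values).
    depth = [[1.0, 1.0],
             [1.0, 1.0]]
    cam = PinholeCamera(fx=1.0, fy=1.0, cx=0.5, cy=0.5)
    verts, faces = depth_to_mesh(depth, cam)
    print(to_obj(verts, faces))
```

Running it prints a four-vertex, two-triangle OBJ patch that common 3D viewers can open. Skipping pixels without a depth estimate is one simple way to avoid stretching triangles across gaps. It cannot, however, recover surfaces the camera never saw, and that is the part a trained model has to infer.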

Applications Across Multiple Industries

This capability is more than a showcase of high-tech wizardry; it is a practical tool with applications in many fields. In e-commerce, for example, sellers can photograph their products and use an image-to-3D tool to create interactive models that buyers can view from every angle. In medicine, radiologists are using AI to convert 2D X-rays into 3D anatomical models that simplify diagnosis and help with surgical planning. Museums have also used the technology to digitize artifacts and showcase them online.

Challenges in Achieving True Interactivity

Turning a two-dimensional image into an interactive 3D asset is possible, but not always simple. Generating a 3D model from a single image has its difficulties, such as occlusion, where only part of the object is visible and the AI must guess the unseen surfaces, and material ambiguity, where the image alone does not reveal what a surface is made of. The AI can draw on what it has learned from similar objects, but the result is not always accurate. Beyond shape, true interactivity also requires realistic motion, lighting response, and user manipulation, which makes the problem considerably more complex.

The Role of Datasets and Training

Data is fundamental to the success of any AI system. To produce high-quality 3D outputs, image-to-3D models need to be trained on diverse datasets that cover many object types, lighting conditions, and viewing angles. Projects such as ShapeNet and Pix3D have supplied much of this foundational data. Less diverse or less detailed datasets lead to less accurate or less realistic 3D outputs.

Tools and Platforms Leading the Charge

A wide variety of companies and platforms are opening up this technology to creators and developers. Research projects such as Google's DreamFusion, which generates 3D models from text prompts, and NVIDIA's Instant NeRF, which reconstructs 3D scenes from a set of photos, have advanced the field, while startups such as Kaedim and Luma AI are pushing its limits further. Some tools are simple enough that users can drag and drop a 2D image and receive a navigable 3D model within a short time. These platforms are quickly removing the technical hurdles that once made 3D modeling a playground for experts only.

What the Future Holds for AI and 3D Modeling

Looking ahead, the implications are significant. As image-to-3D conversion becomes faster and more accurate, it will greatly expand the scope of personalized, real-time 3D content. This could change both online shopping and the way professionals are trained in virtual reality. Eventually, AI may even be able to create 3D environments from multiple inputs such as hand drawings, videos, or even audio.

Conclusion: The 3D Revolution is Just Beginning

As we move toward more immersive and interactive experiences, the ability to turn a flat image into a dynamic 3D model is a game changer, made possible largely by advances in AI. Image-to-3D conversion is not just a future possibility; it is already a reality and is changing the way we visualize, interact, and create. As tools improve and barriers fall, this once-niche toolbox will become a standard part of digital content creation.

-

Celebrity1 year ago

Celebrity1 year agoWho Is Jennifer Rauchet?: All You Need To Know About Pete Hegseth’s Wife

-

Celebrity1 year ago

Celebrity1 year agoWho Is Mindy Jennings?: All You Need To Know About Ken Jennings Wife

-

Celebrity1 year ago

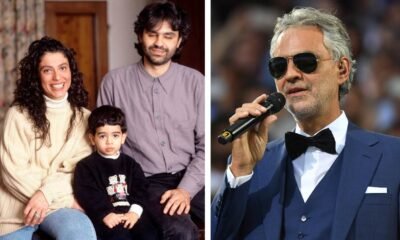

Celebrity1 year agoWho Is Enrica Cenzatti?: The Untold Story of Andrea Bocelli’s Ex-Wife

-

Celebrity1 year ago

Celebrity1 year agoWho Is Klarissa Munz: The Untold Story of Freddie Highmore’s Wife