
Best Practices for Migrating Data from MySQL to BigQuery

Migrating data from MySQL to BigQuery can be an essential step for organizations that want more advanced analytics and room to scale their operations. BigQuery offers faster analytical queries, better scalability, and deep integration with Google Cloud tools, making it an attractive choice for businesses with growing needs.

However, the migration requires careful planning to avoid data loss and to keep performance optimized afterward. By following best practices and choosing the right tools, businesses can make a smooth transition from MySQL to BigQuery and get the most out of what BigQuery offers.

Why Migrate from MySQL to BigQuery?

MySQL is a popular relational database management system that has served businesses well for many years. However, as data volumes grow and analytics become more complex, MySQL can become difficult to scale and manage. BigQuery, Google’s fully managed data warehouse, is designed to handle large-scale datasets with high-speed querying. Organizations that rely on real-time analytics and massive datasets often find BigQuery better suited to their workloads.

The primary reasons to migrate from MySQL include:

- Scalability: BigQuery can handle petabytes of data while keeping query performance consistent, which makes it well suited to large datasets.

- Speed: BigQuery is optimized for fast analytical queries over large datasets, which helps businesses turn their data into insights quickly.

- Integration: BigQuery integrates seamlessly with other Google Cloud services like Google Analytics, Cloud Pub/Sub, and AI tools, enabling advanced analytics.

Choosing the Right Tools and Techniques

When migrating from MySQL to BigQuery, choosing the right tools and techniques is crucial for a smooth process. Organizations can either use third-party integration tools or handle the migration manually with an ETL (Extract, Transform, Load) process. The right method depends largely on the complexity of the migration, the size of the data, and the level of customization required. Whichever approach is chosen, automating the transfer, for example with a Google Cloud Dataflow pipeline that reads from MySQL and writes to BigQuery, minimizes manual input and reduces errors.

Using Hevo Data to Connect MySQL to BigQuery

Hevo Data is a popular choice for organizations looking to simplify their migration. It offers an automated, no-code platform that extracts data from MySQL, transforms it, and loads it into BigQuery without manual intervention. The tool supports real-time replication, which helps businesses avoid downtime and inconsistencies during the process. Hevo Data also provides monitoring features to track the status of the migration and address issues as they arise.

The benefits of using Hevo Data include:

- No need for complex coding or setup.

- Built-in data validation and monitoring features to ensure a smooth migration.

- Fast, reliable, and secure transfer.

Manual ETL Process to Connect MySQL to BigQuery

For those with more complex requirements or tighter control over the migration process, a manual ETL process may be the preferred choice. This involves extracting data from MySQL, transforming it into a compatible format for BigQuery, and then loading it into the new system. While this process can be more time-consuming and technically demanding, it provides full flexibility and control over how information is handled.

Key steps in a manual ETL process include:

- Extracting data from MySQL in small chunks to avoid large-scale disruptions.

- Cleaning and transforming the data to fit BigQuery’s schema.

- Loading the transformed data into BigQuery while keeping it compatible with any schema changes.
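The extract, transform, and load steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not a production pipeline: it assumes the source table has a unique, increasing key column (such as an AUTO_INCREMENT `id`) and that the connection follows Python's DB-API. The helper names `extract_in_chunks` and `rows_to_ndjson` are hypothetical. The demo uses the standard library's `sqlite3` as a stand-in for MySQL; with a MySQL driver such as PyMySQL or mysql-connector-python you would pass `placeholder="%s"`. Each chunk is serialized as newline-delimited JSON, one of the formats BigQuery load jobs accept.

```python
import base64
import datetime
import decimal
import json
import sqlite3
from typing import Any, Iterator, Sequence


def extract_in_chunks(conn, table: str, key: str, columns: Sequence[str],
                      chunk_size: int = 10_000,
                      placeholder: str = "?") -> Iterator[list[dict[str, Any]]]:
    """Yield rows in key order, one chunk at a time (keyset pagination).

    Assumes `key` is unique, increasing, and included in `columns`.
    `table`, `key` and `columns` are interpolated into the SQL, so they
    must come from trusted configuration, never from user input.
    """
    col_list = ", ".join(columns)
    last_key = None
    while True:
        cur = conn.cursor()
        if last_key is None:
            cur.execute(f"SELECT {col_list} FROM {table} "
                        f"ORDER BY {key} LIMIT {int(chunk_size)}")
        else:
            # Seek past the last key seen instead of using OFFSET.
            cur.execute(f"SELECT {col_list} FROM {table} WHERE {key} > {placeholder} "
                        f"ORDER BY {key} LIMIT {int(chunk_size)}", (last_key,))
        rows = [dict(zip(columns, r)) for r in cur.fetchall()]
        cur.close()
        if not rows:
            return
        yield rows
        last_key = rows[-1][key]


def _to_json_value(value: Any) -> Any:
    """Convert driver values into JSON-friendly forms for a BigQuery load."""
    if isinstance(value, (datetime.datetime, datetime.date, datetime.time)):
        return value.isoformat()
    if isinstance(value, decimal.Decimal):
        return str(value)  # keep exact decimal digits for NUMERIC columns
    if isinstance(value, bytes):
        return base64.b64encode(value).decode("ascii")  # BYTES are base64 in JSON
    return value


def rows_to_ndjson(rows: Sequence[dict[str, Any]]) -> str:
    """Serialize rows as newline-delimited JSON, one object per line."""
    return "".join(
        json.dumps({k: _to_json_value(v) for k, v in row.items()}) + "\n"
        for row in rows
    )


if __name__ == "__main__":
    # Demo: sqlite3 stands in for a MySQL connection.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)",
                     [(i, f"{i * 10}.00") for i in range(1, 8)])
    for chunk in extract_in_chunks(conn, "orders", "id", ["id", "amount"], chunk_size=3):
        print(rows_to_ndjson(chunk), end="")
```

Keyset pagination (`WHERE id > last_id ORDER BY id LIMIT n`) is used instead of `OFFSET` because each query can seek directly to its starting key through the index, so later chunks do not get slower as the migration progresses.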

Optimizing Post-Migration Performance

Once the migration is complete, the focus should shift to optimizing BigQuery’s performance. It’s important to ensure that queries run efficiently and that the system is configured to handle future data growth. Some best practices for optimizing BigQuery performance include:

- Partitioning and Clustering: Partitioning tables by time or another logical key can significantly improve query performance by reducing the amount of data scanned during each query. Clustering stores rows with similar values in the clustering columns together, so queries that filter on those columns read less data.

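- Data Modeling: Ensure the data is modeled efficiently for BigQuery, considering the differences between a row-oriented relational database like MySQL and a columnar warehouse like BigQuery. For example, denormalizing related tables into nested and repeated fields can avoid expensive joins.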

- Query Optimization: Writing efficient queries that consume fewer resources is essential. Avoiding full table scans (for example, selecting only the columns you need instead of using SELECT *) and optimizing joins can drastically reduce query costs.
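The partitioning and clustering advice above can be expressed as BigQuery DDL. The Python sketch below simply assembles a `CREATE TABLE` statement with `PARTITION BY` and `CLUSTER BY` clauses; the function name `build_partitioned_table_ddl`, the table `my-project.sales.orders`, and its columns are hypothetical examples. It handles only daily partitioning on a DATE, DATETIME, or TIMESTAMP column and enforces BigQuery's limit of four clustering columns. Setting the `require_partition_filter` option makes BigQuery reject queries that do not filter on the partitioning column, which guards against accidental full scans.

```python
from typing import Mapping, Sequence

MAX_CLUSTERING_COLUMNS = 4  # BigQuery's limit on clustering columns per table


def build_partitioned_table_ddl(
    table_id: str,
    schema: Mapping[str, str],
    partition_column: str,
    cluster_columns: Sequence[str] = (),
    require_partition_filter: bool = True,
) -> str:
    """Return a CREATE TABLE statement for a day-partitioned, clustered table.

    `schema` maps column names to BigQuery types, e.g. {"id": "INT64"}.
    """
    partition_type = schema[partition_column].upper()
    if partition_type == "DATE":
        partition_expr = partition_column
    elif partition_type in ("DATETIME", "TIMESTAMP"):
        # Daily partitions derived from the timestamp's date.
        partition_expr = f"DATE({partition_column})"
    else:
        raise ValueError(f"cannot day-partition on a {partition_type} column")

    if len(cluster_columns) > MAX_CLUSTERING_COLUMNS:
        raise ValueError("BigQuery allows at most four clustering columns")
    unknown = [c for c in cluster_columns if c not in schema]
    if unknown:
        raise ValueError(f"clustering columns not in schema: {unknown}")

    column_defs = ",\n".join(f"  {name} {bq_type}" for name, bq_type in schema.items())
    lines = [f"CREATE TABLE `{table_id}`", "(", column_defs, ")",
             f"PARTITION BY {partition_expr}"]
    if cluster_columns:
        lines.append("CLUSTER BY " + ", ".join(cluster_columns))
    if require_partition_filter:
        lines.append("OPTIONS (require_partition_filter = TRUE)")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_partitioned_table_ddl(
        "my-project.sales.orders",
        {"id": "INT64", "customer_id": "INT64",
         "amount": "NUMERIC", "created_at": "TIMESTAMP"},
        partition_column="created_at",
        cluster_columns=["customer_id"],
    ))
```

The generated statement can be run in the BigQuery console or with `bq query --use_legacy_sql=false`. Queries that apply a range filter on `created_at` should then scan only the matching daily partitions, and filters on `customer_id` benefit from clustering within each partition.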

Key Challenges and How to Overcome Them

Migrating data from MySQL to BigQuery is not without its challenges. Common issues include data inconsistencies, performance bottlenecks, and integration difficulties. However, with careful planning and the right tools, these challenges can be overcome.

Key challenges include:

- Data Transformation: MySQL’s schema might not map directly to BigQuery’s schema. Transforming the data properly before it is loaded is critical to avoid data loss or inconsistencies.

- Downtime: Migration can lead to temporary downtime if not handled correctly. Using real-time replication tools like Hevo Data can minimize this risk.

- Cost Management: Under on-demand pricing, BigQuery charges based on the amount of data each query scans, so it’s important to optimize both the table structure and the queries to avoid excessive costs.
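The Data Transformation challenge above usually starts with mapping column types. Below is a hedged sketch of such a mapping in Python; the function `mysql_to_bigquery_type` is hypothetical, and the mapping reflects common conventions rather than an official table, so check it against your own schema. Two choices are worth calling out: `TINYINT(1)` is treated as a boolean because MySQL uses it for `BOOL`, which may not hold for every such column, and `BIGINT UNSIGNED` goes to `NUMERIC` because its maximum value exceeds BigQuery's signed `INT64` range. Decimal columns go to `NUMERIC` when they fit its 38-digit precision and 9-digit scale, and to `BIGNUMERIC` otherwise.

```python
import re

_SIMPLE_TYPES = {
    # Integers (BIGINT UNSIGNED is handled separately below).
    "tinyint": "INT64", "smallint": "INT64", "mediumint": "INT64",
    "int": "INT64", "integer": "INT64", "bigint": "INT64", "year": "INT64",
    # Approximate numerics.
    "float": "FLOAT64", "double": "FLOAT64", "real": "FLOAT64",
    # Character and enumerated types.
    "char": "STRING", "varchar": "STRING", "tinytext": "STRING", "text": "STRING",
    "mediumtext": "STRING", "longtext": "STRING", "enum": "STRING", "set": "STRING",
    # Binary types.
    "binary": "BYTES", "varbinary": "BYTES", "tinyblob": "BYTES", "blob": "BYTES",
    "mediumblob": "BYTES", "longblob": "BYTES",
    # Temporal types: MySQL DATETIME has no time zone, TIMESTAMP is stored as UTC.
    # Caveat: MySQL TIME can hold durations beyond 24h; BigQuery TIME cannot.
    "date": "DATE", "datetime": "DATETIME", "timestamp": "TIMESTAMP", "time": "TIME",
    "json": "JSON",
}

# base type, optional "(args)", then modifiers such as "unsigned"
_TYPE_RE = re.compile(r"^\s*([a-z]+)\s*(?:\(([^)]*)\))?\s*(.*)$")


def mysql_to_bigquery_type(mysql_type: str) -> str:
    """Map a MySQL column type string, e.g. 'decimal(10,2)', to a BigQuery type."""
    match = _TYPE_RE.match(mysql_type.lower())
    if not match:
        raise ValueError(f"unparseable MySQL type {mysql_type!r}")
    base, args, modifiers = match.groups()
    unsigned = "unsigned" in modifiers.split()

    if base in ("bool", "boolean") or (base == "tinyint" and args == "1"):
        return "BOOL"
    if base == "bigint" and unsigned:
        return "NUMERIC"  # up to 18446744073709551615 does not fit INT64
    if base in ("decimal", "numeric", "dec", "fixed"):
        parts = [int(p) for p in args.split(",")] if args else [10]  # MySQL default
        precision = parts[0]
        scale = parts[1] if len(parts) > 1 else 0
        # NUMERIC holds up to 29 integer digits and 9 fractional digits.
        if scale <= 9 and precision - scale <= 29:
            return "NUMERIC"
        return "BIGNUMERIC"
    try:
        return _SIMPLE_TYPES[base]
    except KeyError:
        raise ValueError(f"no mapping defined for MySQL type {mysql_type!r}") from None
```

Applying the function to each row of `information_schema.COLUMNS` (its `COLUMN_TYPE` column holds strings such as `decimal(10,2)` or `bigint(20) unsigned`) yields a draft BigQuery schema that can be reviewed before the first load.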

Why Choose Hevo Data for Migration?

Hevo Data stands out as an ideal tool for migration due to its ease of use, speed, and reliability. Unlike traditional ETL tools, Hevo provides a no-code solution, which means less manual intervention and faster setup. The tool’s real-time capabilities also ensure that businesses can migrate data with minimal disruptions, reducing the risk of downtime.

Moreover, Hevo offers an intuitive interface that lets users monitor migration progress and resolve issues quickly. By automating the process and offering built-in error handling, Hevo Data helps businesses migrate large volumes of data with less risk of manual errors or performance slowdowns.

Conclusion

Migrating data from MySQL to BigQuery is a strategic decision that can greatly enhance an organization’s ability to manage and analyze data at scale. By selecting the right tools, like Hevo Data, and following best practices for migration and post-migration optimization, businesses can transition to BigQuery with minimal disruption. Though challenges exist, they can be mitigated with proper planning and the right migration tools, leading to long-term benefits such as improved performance, scalability, and better decision-making.

-

Celebrity1 year ago

Celebrity1 year agoWho Is Jennifer Rauchet?: All You Need To Know About Pete Hegseth’s Wife

-

Celebrity1 year ago

Celebrity1 year agoWho Is Mindy Jennings?: All You Need To Know About Ken Jennings Wife

-

Celebrity1 year ago

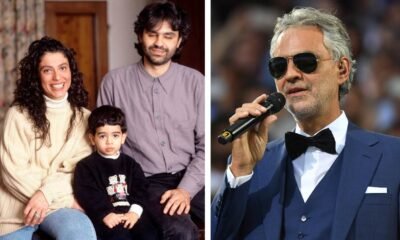

Celebrity1 year agoWho Is Enrica Cenzatti?: The Untold Story of Andrea Bocelli’s Ex-Wife

-

Celebrity1 year ago

Celebrity1 year agoWho Is Klarissa Munz: The Untold Story of Freddie Highmore’s Wife